Concept of blocking directories in robots.txt [closed]


Closed last year .

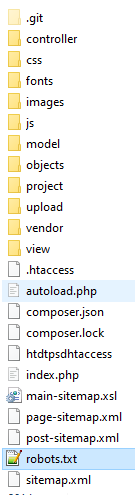

I have a question about blocking files and directories through robots.txt. My site is organized into directories of backend files (models, controllers and classes) that are responsible for generating the content.

The structure is as follows:

Users always navigate through index.php, which triggers the backend files in the other folders and generates the content.

Robots.txt:

User-agent: *

Disallow: /admin/

Disallow: /.git/

Disallow: /project/

Disallow: /model/

Disallow: /objects/

Disallow: /controller/

Sitemap: https://www.detetiveparticularemsp.com.br/sitemap.xml

Is it necessary (or recommended) to block these backend content-processing files and folders in robots.txt, leaving only the images, CSS and index.php available?

2 answers

In the context of web crawling, a crawler is a program that sends requests to domains in order to check, index and organize the available resources for future access.

One reason to have a robots.txt is to avoid unnecessary requests that consume your application's resources.

Whenever a given URL is indexed, it is revisited at some frequency, and each request consumes resources on both the client (here, the web crawler) and the server (here, your application's environment).

So, yes, it is good practice to inform webcrawlers that there are resources that should not be indexed.

The client identifies itself with a User-agent. When it finds a Disallow: / statement in robots.txt, it understands that it should not index anything inside that directory; however, if there is also an instruction such as Allow: /esse-sim, it will index that path.
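To make the Disallow/Allow behaviour concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The rule set, the `allowed` helper, the `ExampleBot` name and the `example.com` URLs are assumptions made for illustration. Parsers differ in how they resolve overlapping rules: Python's applies the first matching rule, while some search engines prefer the most specific one, so the Allow line is listed first here to get the same answer under both readings.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: everything is disallowed except /esse-sim.
ROBOTS_TXT = """\
User-agent: *
Allow: /esse-sim
Disallow: /
"""


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a compliant crawler may fetch `url` under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/esse-sim"))  # True
    print(allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/other"))     # False
```

Note that this check runs inside the crawler; the server takes no part in it.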

As stated in the comments, a crawler reads robots.txt to work out which URLs may become part of its index. CSS is used by your application, and JS is used by the browser, which is also a client like the web crawler but is prepared to fetch JS, unlike the web crawler, which only wants the URLs.

See, webcrawlers are created for various purposes.

When you declare a rule in robots.txt, you are not "blocking access to the resource"; you are telling the crawler that there is nothing there relevant to what it sets out to do.

This means that if a crawler such as chinaspider wants to check whether your application has a vulnerability in /wp-admin, it can still request the resource and add it to its own index, even if robots.txt disallows it.
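The point that a disallowed path can still be requested can be sketched with a throwaway local server. Everything here is hypothetical: `DemoHandler` stands in for a site that publishes a robots.txt disallowing /admin/ but applies no server-side restriction, and `fetch_ignoring_robots` plays a client that never reads robots.txt.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

ROBOTS_TXT = b"User-agent: *\nDisallow: /admin/\n"


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical site: serves robots.txt and answers every other path."""

    def do_GET(self):
        body = ROBOTS_TXT if self.path == "/robots.txt" else b"served " + self.path.encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def fetch_ignoring_robots(path: str) -> tuple[int, str]:
    """Request `path` from a local demo server without consulting robots.txt."""
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0: pick any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", path)
        response = conn.getresponse()
        result = (response.status, response.read().decode())
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(fetch_ignoring_robots("/admin/secret.php"))  # (200, 'served /admin/secret.php')
```

The server answers the disallowed path like any other, because nothing on the server side enforces robots.txt.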

Google, in turn, has its own user agents for various platforms and purposes; because each has a specific purpose, they benefit from being told which resources are irrelevant.

robots.txt only prevents the search engine (Google's, for example) from accessing the content, not a normal browser, and even then only if the search engine chooses to comply. It is not universal protection; it is a "gentlemen's agreement". If you are thinking of it as protection, forget it.

The search engine will ignore everything in the folder that contains this file and will follow the instructions inside it about what else to ignore. That is, you use it only on content that you do not want the search engine to index: content you chose to make public but do not want indexed. On its own, this is of no use.

Understand that what you have in your website's directory structure is not necessarily accessed publicly, whether by a search engine, a browser or anything else, but it can be accessed if the content is public. Access happens through some trigger, for example a link to the page, or a sitemap like the one you have. The main reason to have a sitemap is to want the content properly indexed, so adding robots.txt as well doesn't make the slightest sense: you have one or the other, since they work against each other.

If what you want is to have none of these files accessible then you should configure the HTTP server appropriately so that these files are not public, or at least that they cannot be read directly and can only be executed. It is common for people to use ready-made settings that already fix this. If you do not know how to do it properly then I suggest hiring a professional to do this for you.
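In practice this rule lives in the HTTP server's configuration, not in application code. Purely as a model of the logic, the sketch below shows the kind of check such a configuration expresses: deny any direct request that resolves into a backend directory. The `PRIVATE_PREFIXES` list is an assumption copied from the question's robots.txt, and `should_deny` is a hypothetical helper, not part of any server.

```python
import posixpath
from urllib.parse import unquote, urlsplit

# Assumed backend directories, taken from the question's robots.txt.
PRIVATE_PREFIXES = ("/admin/", "/.git/", "/project/", "/model/", "/objects/", "/controller/")


def should_deny(request_target: str) -> bool:
    """Return True if a direct request for this target should be refused."""
    path = unquote(urlsplit(request_target).path)
    # Collapse "." and ".." so "/img/../model/User.php" cannot slip past the check.
    normalized = posixpath.normpath("/" + path.lstrip("/"))
    # Append "/" so that "/model" itself matches but "/models/..." does not.
    return any((normalized + "/").startswith(prefix) for prefix in PRIVATE_PREFIXES)


if __name__ == "__main__":
    print(should_deny("/index.php?page=home"))    # False
    print(should_deny("/model/User.php"))         # True
    print(should_deny("/img/../model/User.php"))  # True
```

Unlike a robots.txt rule, a check like this is enforced by the server for every client, whether or not that client chooses to cooperate.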

If everything is set up correctly, your site will run the .php files internally on the server and generate output that is sent to whoever requested it. The file will not be read directly, and no one will get its code (if everything is set up correctly).

Since you added a robots.txt, this folder will not be read (index.php will not even be called), and your site will never be indexed. As mentioned in the comments, only index.php is accessed, its content appears to be public, and there is also a *sitemap*, so my suggestion is simply to remove `robots.txt`: it is preventing Google and other search engines from indexing your content.

The impression this gives is that you are trying to protect some folders from being accessed in any way with robots.txt, but that is not what happens. Protection comes from the configuration of the HTTP server (Apache, IIS, etc.) and from the permissions given to files and folders in the operating system's filesystem.