Explain the SVR algorithm

Can anyone explain to me the algorithm Support Vector Regression ? (I use is from scikit)

I've taken a look at some sites but I'm still not getting it right.

1 answers

Decision Process: Selection of characteristics and classification

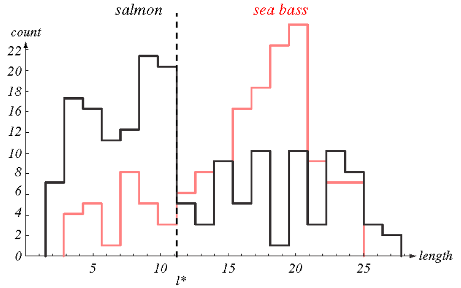

Imagine the following example (taken from the classic book pattern Classification ). You need to develop a system to automatically separate (sort) two types of fish, salmon (Salmon) and Whiting/Sea Bass (Sea Bass). The question is, how to do it? If you consider measuring the length of the fish (somehow it does not come to the case here), when making an analysis on the size from several fish samples (which you collected yourself) you can come to the following result (the source of the images is also the book referenced above):

This graph is a histogram that shows the number of samples (on the Y axis, or count ) that contains a given length (on the X axis, or length ). It can be noted that in these samples (and this is important! more on the subject later) salmon always have a length greater than Whiting up to approximately 11 inches, and Whiting has - in general-longer length than salmon above that.

Given this observation, you could easily make a simple if in a program to decide if an observed fish is a salmon using the value that best separates the two "classes". In this graph, the value is 11 inches, and the dotted line is called the "decision boundary" (decision boundary). Your program would work like this: if the length measured to be smaller than 11 inches, it is a salmon. Otherwise (if the measured length is greater than 11), it is a Whiting.

This classifier program can work well in many cases, but you should note in the chart that there are quite a few chances of error. Values exactly at 11 inches are difficult to differentiate and even having more occurrences of Whiting with length 17, for example, still there are quite a few examples of salmon with this size. In addition, above 22 inches salmon happens to have a larger size. More IFs could be used with other decision borders, but still in these areas it would be very easy to occur classification errors. Perhaps there are other better variables.

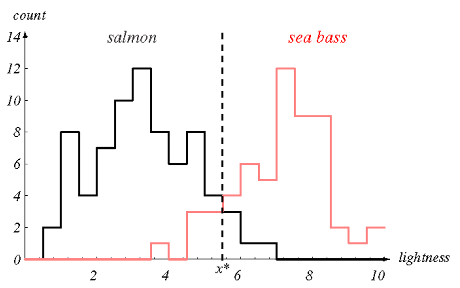

In fact, if you are able to measure the lightness (lightness ) of fish skin color, you will observe the following:

That is, with this variable or "feature" (in English called feature ) is much more easy to differentiate salmon from Whiting (with just one IF!). Still, there is some confusion in the region of the luminosity value close to 5.8 (I do not remember the unit used in the example), which is precisely the best decision boundary for this characteristic.

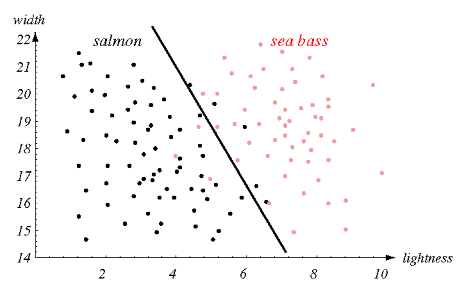

But, what if we use the two characteristics, is it easier to decide? See this other graph where both characteristics are presented on the two axes, and each sample( that is, each fish) is presented as a point in this two-dimensional space:

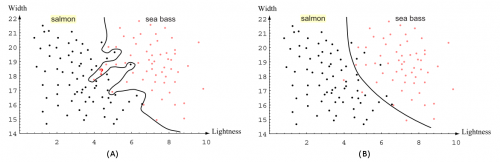

The dividing line is also called the decision boundary. It separates the fish reasonably well from the samples used to generate the classifier (so they are called "training data"), but you can see that there are still some errors, which may be due to measurement failures of the characteristics or to individuals were of the standard (outliners) that may be ignored. One could try generating a classifier Exact (figure a below), but it would probably be too fine-tuned to sample data (which may be unrepresentative or contain sampling errors) and error by overfitting (something called overfitting or overfitting). The ideal is to generate a model that approximates the real world from the training data, and not merely replicates it. Perhaps in this example it is more interesting, rather than a linear separator (a line or a plane), to use a polynomial separator (as a curve close to an equation of the third degree, for example, as in Figure B below).

It should be clear that by using two characteristics the result is a dispersion of the samples in a two-dimensional plane (X and y axes, one for each characteristic). Thus, the decision boundary is still a line (in the previous examples, using each characteristic individually, it was a point in fact - the value of the IF). It should also be easy to realize that when add one more characteristic, this graph would become a three-dimensional space, and the decision boundary would become a plane separating the two classes. With more characteristics, the dimension of the problem would continue to increase, and so this decision boundary is generically called hyperplane.

The use (or need for use) of many characteristics is not always good, because it raises the dimensions of the problem and makes it difficult to find the decision boundary, generating the call dimensionality curse . Therefore, the process of analyzing and selecting the best features ( feature selection ) is very important.

Classification and regression

These examples are about learning a decision boundary from training data (the problem samples) to sort future unknown samples between two distinct classes (let's call it A and B). But there are others types of problems where you do not want to sort the new data, but rather calculate an output value from the input characteristics. The process is more or less the same, but the decision boundary is used as the approximate function that generates the results. The linear regression is a classic example of this type of problem, in which from a set of data one "learns" an equation that can then be used to calculate the result from a new sequence of parameters. This matter is taken up further.

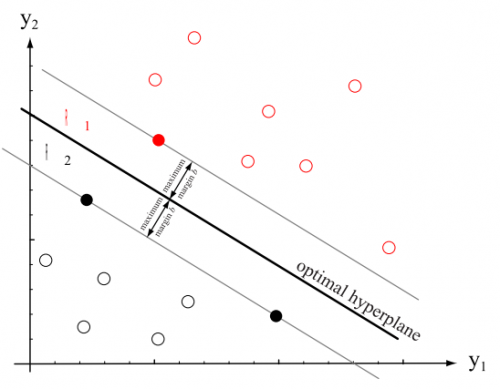

The Support Vector Machine

A Support Vector Machine (or Support Vector Machines = SVM) is an algorithm built to find the best decision boundary (sometimes called a decision surface) that separates two single classes (A and B) from the training samples. It does this by a continuous optimization process in which it seeks to find the hyperplane that best separates the two class. This hyperplane is the one whose distance (in the graph below called maximum margin B) from the examples of the classes is as large as possible (so as to be as well separated as possible!). Note that not all examples of classes A and B are necessary to calculate this distance and obtain this hyperplane (only those closest to the area of confusion), and it is precisely these points (or vectors, in geometric terms used in the mathematical foundation of the algorithm) that import. They are called "support vectors" because they characterize the separator hyperplane, and it is precisely dai that comes the name of the algorithm.

Note: the distance (with signal, once a positive value is one side of the hyperplane and a negative value is on the other side) from a sample to the hyperplane separator is called score is can be understood as an indication of how relevant the sample is the class classified (i.e., the further from the plan is a sample, the more strongly it can be believed that she percent to the class of that "side").

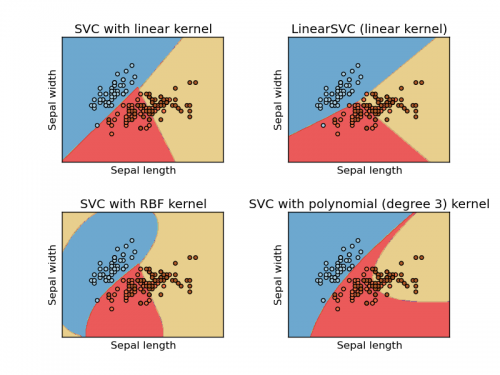

I'm not going to go into detail in implementing this optimization process because it requires a lot more information (and this answer is already running away from the SOPT ideal even without it! hahaha), and also because you don't need to know such details to use libraries like Scikit-Learn (Python) and libSVM (C++). What you need to know is that the Kernels used by SVM serve only to allow flexibility of the decision boundary, allowing other options besides a linear separator. Also that for the classification between more than two classes, the algorithm simply uses a set of binary classifiers, trained for each combination of pairs (1, n-1): the samples of Class A are considered normally and the other samples (of all other classes) are considered as samples of a Class B - this individual classifier only indicates whether or not a sample is of Class A - with one of these for each class, one can separate them by the best response among the classifiers for each sample.

Scikit-learn, for example, has some kernels that allow very useful nonlinear hyperplanes (the example image is from the scikit-learn website itself, illustrating the separation of three Orchid classes from the classic databaseIris Dataset):

SVM and regression

SVMs are designed to serve as classifiers. However, they can be used for regression by taking an additional training step in which the regression values are estimated from the probabilities of relevance to the classes obtained with additional tests (and thus the training takes much longer). This procedure is called Platt Scaling and was proposed by John C. Platt in the article Probabilistic Outputs for Support Vector Machines and Comparisons to Regularized Likelihood Methods. Scikit-learn, for example, differentiates methods into separate classes: SVC is the (normal) classifier and SVR is the regressor, using Platt Scaling.

The basic principle of Platt Scaling is as follows:

The SVM is normally trained from the input data, generating a classifier capable of predicting the class by indicating a value of score with sign for a new test sample (negative scores indicate Class A, and positive indicate Class B, for example).

Using cross-validation K-Fold, several tests are performed to predict the result with the different samples. Depending on the quality of the classifier, it will hit some times and miss others, and such results are accounted for. How to do various tests with partitions of the data itself training (such as K-Fold), the algorithm naturally takes longer to execute.

With the proportion of hits in the tests, a probabilistic model (usually using the logistic function) is constructed (or "learned") by adjusting the parameters of the logistic function, so that the result of the classifier ceases to be a binary indication y = sign(f(x)) (i.e. y = sign of the value of score of Class A or B, given the observation of characteristics) and become a probability P (y=1 / x) or p(y=-1|x) (that is, the conditional probability of the sample being of Class A, or B, given the observation of x).

This probability is a real (infinitesimal) value and not merely a sign. It really represents a probability that the prediction is right given tests performed with the classifier, and not an exclusive distance from a single sample. Thus, this new program is the equivalent of a regression and can be used to predict an outcome rather than merely indicating a binary classification.

P.S.: one might ask: Why do a logistic regression with multiple tests instead of directly using the value of the score (and not only its signal) as a regression value? The answer is that this is due to avoid overfitting. The score is a very individual measure of a sample (its particular distance to the hyperplane), and the probability based on several tests decreases the effects of bad samples (due to measurement errors, for example).