How does a computer understand binary code?

How does a computer understand binary code? How was binary code created and who created it?

2 answers

Concrete part

As the name says, binary is just a sequence of bits, that is, of indicators that are either on or off. At execution time these are only low- and high-voltage electrical pulses ("high" is a manner of speaking: the voltage is actually quite low, just a little higher than the "low" level, which is almost zero).

All this goes through logic gates (the electronic equivalents of relays) that each perform some operation, in general:

- inverting a signal from 0 to 1 or from 1 to 0 (not),

- keeping the state (buffer),

- resulting in 1 when at least one of two signals is 1 (or),

- resulting in 1 only if both signals are 1 (and),

- plus some variations of these.
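As a rough illustration of the list above, the gates can be modeled as tiny Python functions on bits. This is only a sketch: the function names (`not_gate`, `truth_table`, and so on) are made up for the example, and real gates are circuits, not function calls.

```python
# Each gate takes bits (0 or 1) and returns a bit.
def not_gate(a: int) -> int:
    return 1 - a          # inverts the signal

def buffer_gate(a: int) -> int:
    return a              # keeps the state

def or_gate(a: int, b: int) -> int:
    return a | b          # 1 when at least one input is 1

def and_gate(a: int, b: int) -> int:
    return a & b          # 1 only when both inputs are 1

# "Variations" are combinations of the basic gates.
def nand_gate(a: int, b: int) -> int:
    return not_gate(and_gate(a, b))

def xor_gate(a: int, b: int) -> int:
    return and_gate(or_gate(a, b), nand_gate(a, b))

def truth_table(gate):
    # Outputs for the inputs (0,0), (0,1), (1,0), (1,1), in that order.
    return [gate(a, b) for a in (0, 1) for b in (0, 1)]

if __name__ == "__main__":
    for gate in (or_gate, and_gate, nand_gate, xor_gate):
        print(gate.__name__, truth_table(gate))
```

Note that `xor_gate` and `nand_gate` use nothing but the basic gates, which is the point: more complex behavior comes only from wiring simple gates together.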

As the bits pass, they excite these "relays" according to the logic gates and determine which path these and other bits take. Decisions in the processor are gates opening or closing as the electrical pulses (data, information) pass through them.

This is all the processor knows how to do. A set of gates interconnected in a specific order will produce specific actions.

Think of gates as very simple (but very concrete) instructions that, put in a certain order, form a program, an algorithm, to do the most basic things (an addition, which is not the simplest to build but is the simplest to understand), up to some very complex or special-purpose ones in modern processors (encryption or vectorization, for example). This varies by architecture (see below).

The processor has an instruction set.

Bits pass through these gates once per clock cycle, and the clock frequency is measured in hertz. On a 3.0 GHz processor there are 3 billion cycles per second (a lamp usually "flashes" 60 times per second). Some instructions need several cycles to execute completely. Even an addition, counting everything the instruction involves, may not be carried out in a single cycle.

A processor is a huge state machine.

Modern processors have billions of transistors, the components that logic gates are built from.

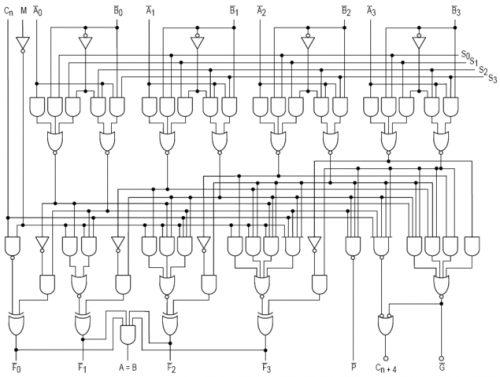

Example diagram of gates (obviously this is a representation for a human to understand better):

Abstract part

So every processor has, under the hood, "programs" built into it out of electrical mechanisms, which in some cases are called microcode. Part of what is in there controls how these programs should work. One part brings content from memory into the processor, works out what it is and does something with it. Some of that content is the binary code in question. A few bits then go to a specific place and trigger the part of the processor that should do something.

There was a time when programming meant programming the hardware itself, that is, arranging gates or ready-made sets of gates to do what was desired. This happened in the 1940s, when modern computing started, and it remained common until the 1970s; now it is rare, but it still happens in niches.

Creating a processor, simply put, is a mixture of finding and manipulating the right materials to get the desired properties (speed, heat dissipation, etc.) and creating "programs" within it that will perform the most basic things.

Some bits indicate that the processor should pass control to the "internal program" that performs an addition, for example. The following bits are the values to be added (the adder is simply a few gates, such as XOR, AND and OR, with an extra path to take care of the "carry the one"), and the result is placed in an area of the processor for another instruction to do some other operation with it, possibly sending it to memory.
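To make the "carry the one" idea concrete, here is a minimal sketch of an adder built only from gate-like bit operations (`^` for XOR, `&` for AND, `|` for OR). The names `full_adder` and `add_bits`, and the default width of 8 bits, are assumptions chosen for the example; a real processor does this in hardware, usually with faster carry schemes.

```python
def full_adder(a: int, b: int, carry_in: int):
    """Add three single bits; return (sum_bit, carry_out)."""
    partial = a ^ b                              # XOR: sum without carry
    sum_bit = partial ^ carry_in                 # include the incoming carry
    carry_out = (a & b) | (partial & carry_in)   # the "carry the one"
    return sum_bit, carry_out

def add_bits(x: int, y: int, width: int = 8):
    """Ripple-carry addition, one bit position at a time.

    Returns (result, final_carry); the result wraps around at `width` bits.
    """
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1            # bit i of x
        b = (y >> i) & 1            # bit i of y
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i      # place the sum bit at position i
    return result, carry

if __name__ == "__main__":
    print(add_bits(5, 3))      # small values, no overflow
    print(add_bits(255, 1))    # overflows 8 bits, final carry is set
```

The carry produced at each position feeds the next one, which is why this arrangement is called a ripple-carry adder.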

Some architectures have variable-size instructions (from 8 to 120 bits on Intel x86, for example), typically in CISC; in others the instruction size is always the same no matter what the instruction is (32 bits in classic ARM, for example), typically in RISC.

Simply put, the first has the advantage of saving space and the second has the advantage of performance and efficiency, although there are techniques to compensate for either weakness, so the picture is more complex, and today each approach borrows from the other.

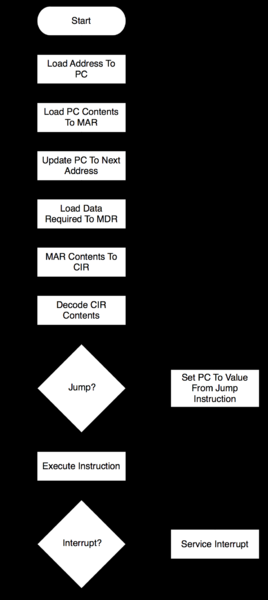

Now, how does it get these bits and know what to do? It has "little programs" controlling it. This is the instruction cycle (fetch, decode and execute). From Wikipedia (typical in CISC; in RISC the instructions have a simpler cycle):

- calculating the memory address that contains the instruction

- fetching the instruction

- decoding the instruction

- calculating the address of the operands

- fetching the operands

- executing the operation

- storing the result in a memory address or register
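The steps above can be sketched as a toy simulator. Everything about the machine here is invented for illustration: the 16-bit instruction layout (4-bit opcode, 4-bit register number, 8-bit operand), the four opcodes and the names `encode` and `run` do not correspond to any real processor.

```python
# Hypothetical opcodes for a made-up 16-bit machine.
HALT, LOADI, ADD, STORE = 0, 1, 2, 3

def encode(opcode: int, reg: int = 0, operand: int = 0) -> int:
    """Pack one instruction: [4-bit opcode][4-bit register][8-bit operand]."""
    return (opcode << 12) | (reg << 8) | operand

def run(memory: list) -> list:
    """Run the program stored in `memory`; return the registers at HALT."""
    registers = [0] * 16
    pc = 0                                  # program counter
    while True:
        ir = memory[pc]                     # fetch the instruction
        pc += 1                             # address of the next instruction
        opcode = (ir >> 12) & 0xF           # decode the bit fields
        reg = (ir >> 8) & 0xF
        operand = ir & 0xFF
        if opcode == HALT:
            return registers
        elif opcode == LOADI:               # register <- constant
            registers[reg] = operand
        elif opcode == ADD:                 # fetch operand from memory, add
            registers[reg] = (registers[reg] + memory[operand]) & 0xFFFF
        elif opcode == STORE:               # store the result in memory
            memory[operand] = registers[reg]
        else:
            raise ValueError(f"unknown opcode {opcode}")

# Program and data share the same memory: cells 0-3 are code, 5-6 are data.
program = [
    encode(LOADI, 0, 5),    # r0 = 5
    encode(ADD, 0, 5),      # r0 = r0 + memory[5]
    encode(STORE, 0, 6),    # memory[6] = r0
    encode(HALT),
    0,
    7,                      # memory[5]: the value to add
    0,                      # memory[6]: where the result goes
]

if __name__ == "__main__":
    memory = list(program)
    registers = run(memory)
    print(registers[0], memory[6])
```

The instructions are plain integers sitting in memory next to the data; only the program counter decides which cells get treated as code.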

A number of specific components are used to make it all work, typically: PC, MAR, MDR, IR, CU, ALU, MMU, just to stay with the basics. Modern, general-purpose processors have parts that do much more than that to optimize operations and provide other functionality.

More general-purpose processors have additional parts to help the operating system work, such as process control, memory management, interrupts (signaling), protection, etc. Some parts are specific to certain types of application, especially on more modern and powerful processors; virtualization is one example.

I'll stop here because the question is a little broad and almost out of scope (but far from deserving closure).

Obviously I made some simplifications, so do not take everything literally; I did not even talk about how the processor communicates with memory. You can ask about more specific things that a developer needs in order to understand what they are doing.

Incidentally, it is interesting to see how a language virtual machine works: CLR, JVM, Parrot, NekoVM and the language-specific ones of Lua, PHP, Python, Harbour, etc., because they simulate a processor in all of this abstract part (the interpreted ones with a huge switch-case :) ).
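A very reduced sketch of that "huge switch-case" idea follows. The bytecode (`PUSH`, `ADD`, `MUL`, `HALT`) and the function name `run_bytecode` are hypothetical and far simpler than what the CLR, the JVM or Lua actually use; the point is only the shape of the dispatch loop.

```python
def run_bytecode(code: list) -> list:
    """Interpret a tiny stack-based bytecode; return the final stack."""
    stack = []
    pc = 0                        # index of the next instruction in `code`
    while pc < len(code):
        op = code[pc]             # fetch
        pc += 1
        # The "switch-case": one branch per instruction.
        if op == "PUSH":
            stack.append(code[pc])    # the operand follows the opcode
            pc += 1
        elif op == "ADD":
            b = stack.pop()
            a = stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b = stack.pop()
            a = stack.pop()
            stack.append(a * b)
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return stack

if __name__ == "__main__":
    # Computes (2 + 3) * 4.
    print(run_bytecode(["PUSH", 2, "PUSH", 3, "ADD", "PUSH", 4, "MUL", "HALT"]))
```

The loop fetches, decodes and executes just as the hardware cycle does, except that each step is ordinary software running on a real processor.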


Complementing the subject:

In a general summary, for those who are lost, the logic of binary code is

0 -> off

1 -> on.
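As a small illustration of how a row of on/off states becomes a number, here is a sketch in Python. The helper names `bits_to_number` and `number_to_bits` and the width of 8 bits are assumptions made for the example.

```python
def bits_to_number(switches) -> int:
    """Read a row of on/off states (most significant first) as a number."""
    value = 0
    for state in switches:
        value = value * 2 + (1 if state else 0)   # shift left, add new bit
    return value

def number_to_bits(value: int, width: int = 8) -> list:
    """Return the on/off states (most significant first) for a number."""
    return [(value >> i) & 1 for i in reversed(range(width))]

if __name__ == "__main__":
    switches = [0, 1, 0, 0, 0, 0, 0, 1]    # off, on, off, off, off, off, off, on
    number = bits_to_number(switches)
    print(number, chr(number))             # the same bits read as number or text
    print(number_to_bits(number))
```

The same eight states can be read as the number 65 or as the letter "A"; the meaning depends on what the program decides to do with the bits.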

Modern computing

Early "modern computers" organized data on punched cards. Those who attended basic computer classes usually learn about them. Punched cards were created in 1832 and later, around the turn of the 20th century, were improved by a company we know today as IBM.

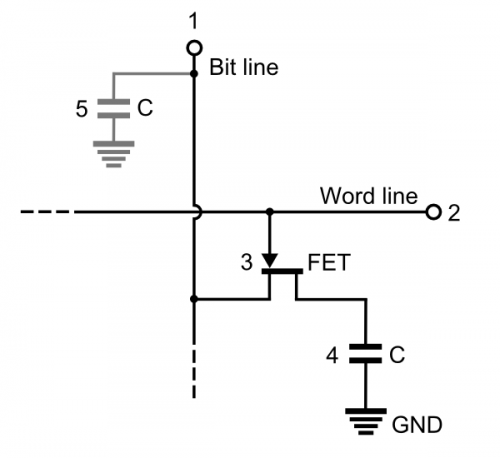

In 1946 memory cells appeared. Today they are built as integrated circuits made of transistors and capacitors, which is the basis of RAM (DRAM, SRAM) and of related devices such as the flip-flop.

Origins

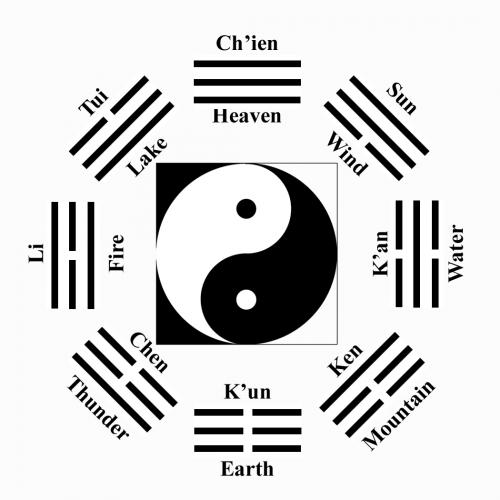

The creation of the modern binary code is attributed to Gottfried Leibniz.

His inspiration was the Chinese I Ching: the creation of the code is based on the diagrams of that book.