How is a compiler made?

Is a compiler programmed in which language? Are all compilers of a language the same or can one exist better than another?

1 answers

To not get too broad I will make a summary (is, this is a summary: P). And I'll make some simplifications. Certainly more specific questions fit to delve into some points.

Various techniques

Compilers, like any application, can be done in a variety of ways. There are several studied techniques that work best, but there is no unanimity of which is better. And probably each can be better in each language and different goals. This is one of the most studied subjects in computing.

What is known is that this is one of the most studied problems in computing, it is a very specific problem and that attracts a lot of people. And there is always News, recently there was a revolution in the way of building compilers, even if the basis is the same.

Some examples of ways to do one of the main stages of compilation can be found in another question my.

The parsing can be written manually (most good compilers do this) or it can be generated by specialized software, also described in another question.

Choosing the best technique can give a brutal difference in speed, quality of error detection, ease of maintenance, etc.

There are variations of how tree analysis is done, for example: starting from top-down ( top-down ) or bottom-up (bottom-up*).

Compilers they make extensive use of recursion. This is one of the domains where this is really quite useful.

Languages used

They can be written in any language, like any problem that can be solved computationally. The compiler of a language can even be written in the language itself.

Opa, how can this be possible? Isn't the egg and chicken problem?

This is called bootstrapping. The compiler of one language begins to be written in another language and then gradually or at the end of the process it is rewritten using the language it compiles. The problem was solved in the same way as the egg and the chicken, there was no first, there was mutation (the religious view is the non-explanation, that is, it is what today is called Good Practice, follow what I said and shut up).

One day only the machine code existed, it was used to write an assembly assembler (which is similar to a compiler, only simpler), which was used to create the first high-level language (mainstream), the Fortran, which was later used to create other compilers, and so on.

An example of this is the C#compiler which was originally written in C++ and more recently was rewritten in C#. C obviously did this and many languages today do the same even to show how much they are able. Low-performance languages avoid doing so because compilers need to be fast. By the way, most compilers are written in C or C++, since they are powerful languages, ubiquitous and allow high performance.

Its operation

The compiler takes a text, parses it character by character, tries to find patterns recognizable by a pre-set grammar , parses whether everything makes sense, and generates a dataset that it will allow the creation of the program to be run. There is not much secret, the basic idea is quite simple. Of course, the relationships between all elements of the language begin to complicate. The complexity of it is mainly due to the complexity of the language.

Compilation Phases can vary from compiler to compiler. And if it will be done in one or more steps as well (phase and step are different things, the first can occur separately but occur in my step). It it depends on the architecture of the compiler and the need for the language. So making a assembler is much simpler than a compiler. An assembler works with very simple rules.

Often the compiler is divided into front-end (takes more care of the source language) and back-end (takes more care of the target platform), and even middle-end, dividing some of the phases well.

Lexical analysis

A basic analysis ( scanning ) where it tries to find certain patterns is called lexical analysis. In it the search is for recognizable tokens. These tokens will be elements that can be used to produce some understanding in the code. Examples of tokens : operators, keywords, identifiers, literals, etc. If you find snippets of text that cannot be considered tokens already allows the compiler to generate an error syntactic.

At this stage each character will be read and see if it can be something recognizable. For example:

- he thinks a

i, knows nothing what to do with it; - after you find a

f, you still can't be sure that this is something useful; - then finds a space and can now discover that the previous

ifis possibly a keyword; - now finds a

x, and doesn't know what to do with it; - finds a

=and you still do not have conditions for a decision unless there is a possible identify before, you have to do a broader analysis to be sure; - finds a

=and you can't be sure what it is; - finds a

1, now he knows that what he had previously found was an operator, he can even identify which; - now finds a line break, and you can be sure that

1was a numeric literal.

Preprocessing

Here there may be pre-processing which is a very simplified translation of some tokens by others. It is a very simple exchange usually without major checks.

Parsing

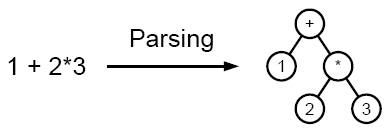

Then comes syntactic analysis ( parsing) which organizes these tokens all forming constructs that can be understood in isolation. He uses the method of dividing to conquer. Typically a tree of tokens is assembled called AST . In this process it is checked if the found construction is correct for what is expected in that language. If not, the compiler has to generate a syntactic error.

Good compilers do a deeper analysis to generate more accurate errors that can further help the programmer know what it is about without causing confusion. Between these analyzes it checks if in a place that expects a variable has this, if it expects an expression it is there, etc.

Using the previous example, at this stage he will see if the if fits in that place, if then comes an expression, as it should be, checks if the expression has a correct form, for example if it has an operator and two operands on each side, checks if there is nothing else that is interfering. Of course, this is a huge simplification of the process.

The idea here is to use the good old divide to conquer which is used in everything in the computing, and that is the best way to solve a good part of the problems even by humans (algorithms serve for humans too), and many do not know this (solve one part at a time), unless the problem is division, then the union is solution. Sorry for the offtopic but some humans have solved the problem of the masses with the divide and conquer, political and religious, for example, so it is easier and easier they rule the world, we are more and more divided

Semantic Analysis

Then comes the semantic analysis that looks for whether the relationships between the tokens are in line with what is expected for the language. It analyzes whether the data types are suitable in each place, whether an operation is possible, whether something needed to complete an operation was missing, etc. If something like this does not work out, the compiler will generate a semantic error. At this stage additional information will be collected to help with optimization and code generation. It can also better organize and simplify the syntactic tree.

By the previous example one of the many analyzes that will be done is that if the expression of the condition will result in a boolean, which is necessary for all if. It will also check if the operator == can be used with x according to its type and with a numeric literal. Note that these checks, although related and one dependent on the other are done separately.

If I had multiple operators would make the selection of Precedence, which would change the tree.

Example of Abstract Syntax Tree :

Typing analysis

May be a variation of semantic analysis. In complex typing languages it may be useful to have this separate.

Code Optimization

An optional next step is optimization. There some changes can be made to the syntactic tree (although the phase can try to modify another representation). These changes aim to make the code look better to save processing and memory, that is, to be able to perform the same operation, obtaining the same result, taking better advantage of the processing capacity of the target of this program.

Optimization techniques commonly used.

Code Generation

The last phase is code generation. Starting from the parsed and modified syntactic tree it is already possible to generate the output, which is usually code in another language, such as assembly or the machine code for a specific processor. In general it is a sequence of bytes that the processor or a virtual machine understands.

Using our example above, hypothetically, it could generate an instruction for the processor to do a numerical comparison (CMP) and then a conditional deviation (JZ).

In some cases it is possible that this generation is an intermediate code that can go through another optimization and generation of a final code. This technique greatly helps the compiler be built to suit several different platforms.

See a example of the compiler flow .

Other phases can be used, there is no rule that compilers need to be like this. Nowadays modern compilers are more modular so they can be used in other tools that need some of these phases alone. Great for syntax highlighters, refactors, static parsers, AOP tools, code generators, REPL, formatters, etc.

Variations

When a compiler generates code for another high-level language at this last stage, it is customary to be called transpilator.

When this phase executes commands instead of generating a binary or source code in another language it functions as a interpreter. Usually the interpretation works a little differently, since the compilation and execution take place in small blocks. But this has changed, interpreters are benefiting from a more global analysis.

I didn't even talk about other types like the Just-in-time compiler.

Conclusion

Every programmer should learn how a compiler works. Even if he's never going to write one. There is a huge difference in the quality of the programmer that he understands this (and other matters) and what he does not understand. You don't need to be a deep connoisseur, but you need to know what it's like to use the tools of your day to day in a better way. Just need to avoid the cargo cult.

I see many programmers who suffer a lot doing wrong things because they do not understand the workings of mathematics, languages, science, computer, operating system, languages and compilers, data structures and algorithms, paradigms, methodologies, etc. And the worst thing is that some, even though they don't know anything about it, think they know everything they need and don't need to learn more, to improve. Want to know what you're doing? If you dedicate yourself, don't think you already know everything. You always have something to learn. And compilation is one of the most fascinating areas of computing.

References

The canonical book on the subject is the Dragon's Book (considered obsolete by many).

Grammar example - C language - compiler example .

Modern compiler Construction [video].