How to webscrapping a site that has POST method?

I'm having trouble doing webscrapping for sites that use the post method, for example, I need to extract all political party related news from the site: http://www.diariodemarilia.com.br .

Below is a Schedule I made from a journal that uses the get Method to show what my goal is with this schedule.

# iniciar bibliotecas

library(XML)

library(xlsx)

# URL real = http://www.imparcial.com.br/site/page/2?s=%28PSDB%29

url_base <-"http://www.imparcial.com.br/site/page/koxa?s=%28quatro%29"

url_base <- gsub("quatro", "PSD", url_base)

link_imparcial <- c()

for (i in 1:4){

print(i)

url1 <- gsub("koxa", i, url_base)

pag<- readLines(url1)

pag<- htmlParse(pag)

pag<- xmlRoot(pag)

links <- xpathSApply(pag, "//h1[@class='cat-titulo']/a", xmlGetAttr, name="href")

link_imparcial <- c(link_imparcial, links)

}

dados <- data.frame()

for(links in link_imparcial){

pag1<- readLines (links)

pag1<- htmlParse(pag1)

pag1<- xmlRoot(pag1)

titulo <- xpathSApply (pag1, "//div[@class='titulo']/h1", xmlValue)

data_hora <-xpathSApply (pag1, "//span[@class='data-post']", xmlValue)

texto <- xpathSApply (pag1, "//div[@class='conteudo']/p", xmlValue)

dados <- rbind(dados, data.frame(titulo, data_hora, texto))

}

agregar <-

aggregate(dados$texto,list(dados$titulo,dados$data_hora),paste,collapse=' ')

#definir diretorio

setwd("C:\\Users\\8601314\\Documents")

# salvar em xlsx

write.xlsx(agregar, "PSDB.xlsx", col.names = TRUE, row.names = FALSE)

If it is not possible to solve my problem, I would like directions where I can find examples of programming with the post method.

1 answers

In this case you can do so using the package httr:

library(httr)

library(rvest)

library(purrr)

library(stringr)

url <- "http://www.diariodemarilia.com.br/resultado/"

res <- POST(url, body = list("Busca" = "PT"))

After that you can extract the data in the usual way or using the rvest:

noticias <- content(res, as = "text", encoding = "latin1") %>%

read_html() %>%

html_nodes("td")

# extrai titulos

noticias %>%

html_nodes("strong") %>%

html_text()

# extrai links

noticias %>%

html_nodes("a") %>%

html_attr("href") %>%

keep(~str_detect(.x, fixed("/noticia/")))

# extrai data

noticias %>%

html_nodes("em") %>%

html_text()

The idea to extract information when the site receives forms POST is to find out what information the site sends to the server.

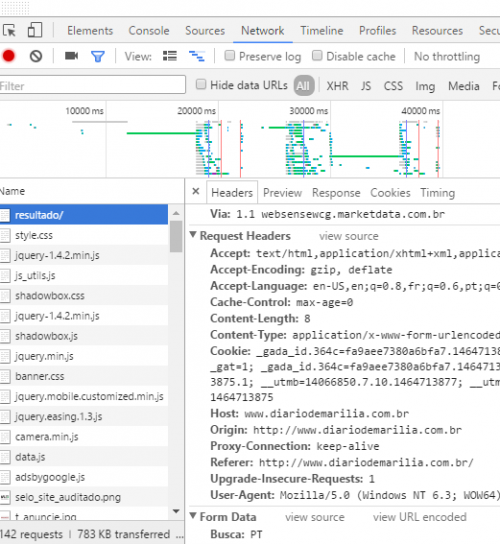

I always open the site using the Chrome grip F12 to open the developer tools and go to the Network tab.

Then send the form usually by the site and return p / a tab Network, and click on the first item of the list, in this case it is /resultado/.

Now, See below in the image the part form data, it is this information that you need to send to the server using the parameter body of the function POST of the httr.