MLP neural network training

Hello, I am developing a neural network to recognize five geometric figures: circle, star, pentagon, square, and triangle. The network is an MLP with one hidden layer (10 neurons). The input layer has 400 neurons, one for each pixel of the image containing the figure, and the output layer has a single neuron whose target value identifies the figure: 0.0 for circle, 0.25 for star, 0.5 for pentagon, 0.75 for square, and 1.0 for triangle. The network is trained with backpropagation.

The input data is normalized to 0 or 1: 1 for white and 0 for black, since the images are monochrome bitmaps.
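To make the input encoding concrete, here is a minimal sketch using NumPy. The function name `bitmap_to_input` is hypothetical, and it assumes the bitmap is already loaded as a 20x20 array in which any non-zero value means white.

```python
import numpy as np

def bitmap_to_input(pixels):
    """Flatten a 20x20 monochrome bitmap into a 400-element input vector.

    Assumption: `pixels` is a 20x20 array where non-zero means white
    and zero means black.
    """
    arr = np.asarray(pixels)
    if arr.shape != (20, 20):
        raise ValueError(f"expected a 20x20 image, got {arr.shape}")
    # White -> 1.0, black -> 0.0, then flatten row by row
    return (arr != 0).astype(np.float64).ravel()

# Example: a black image with a white 2x2 block in the top-left corner
img = np.zeros((20, 20), dtype=np.uint8)
img[:2, :2] = 255
x = bitmap_to_input(img)
```

Each element of `x` then feeds one of the 400 input neurons.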

For training, 27 patterns of each type are used, each drawn on a 20x20 px grid, which gives the 400 neurons of the input layer. For validation, which is performed at every training epoch, there are 18 patterns of each type.

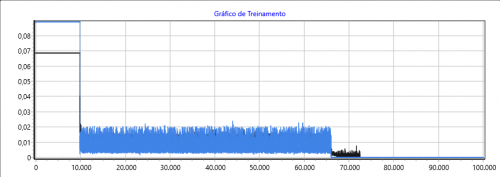

The problem is in training: the network does converge to a minimum error, but the error becomes very unstable, as the graph shows (image 1).

Other network parameters:

- Learning rate: 0.1

- Momentum: 0.8

- Hidden-layer neurons: 10
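For context, this is a sketch of how the learning rate and momentum enter a weight update. It assumes the classical momentum form (new step = negative learning rate times gradient, plus momentum times the previous step); the actual implementation may differ, and `momentum_step` is a hypothetical helper.

```python
import numpy as np

LEARNING_RATE = 0.1
MOMENTUM = 0.8

def momentum_step(weights, grad, prev_delta, lr=LEARNING_RATE, momentum=MOMENTUM):
    """One gradient-descent update with classical momentum (assumed form)."""
    # The new step blends the current gradient with the previous step
    delta = -lr * grad + momentum * prev_delta
    return weights + delta, delta

w = np.array([1.0, -2.0])
prev = np.zeros_like(w)
g = np.array([0.5, -1.0])   # made-up gradient, for illustration only

w, prev = momentum_step(w, g, prev)  # first step: delta = -0.1 * g
w, prev = momentum_step(w, g, prev)  # second step adds 0.8 * previous delta
```

With momentum 0.8, repeated gradients in the same direction build up to an effective step of up to 1 / (1 - 0.8) = 5 times the plain learning rate, which is one reason these two parameters interact.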

I have tried changing the three parameters above, but without success: either the network becomes more unstable, or it takes a long time for the error to start decreasing. When I check the images, the network does recognize the patterns, but I would like to use early stopping based on cross-validation to ensure that it generalizes, and for that I need validation error values that are more stable than those in the graph. Thanks in advance!
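To illustrate the kind of early stopping meant here, the following is a minimal sketch with a patience window, which is one common way to tolerate a noisy validation curve. The function name, the `patience` and `min_delta` parameters, and the error values are all assumptions for illustration, not results from the network above.

```python
def early_stopping_epoch(val_errors, patience=20, min_delta=1e-4):
    """Scan per-epoch validation errors and decide where to stop.

    Returns (stop_epoch, best_epoch). stop_epoch is None if the error
    never failed to improve for `patience` consecutive epochs.
    """
    best_err = float("inf")
    best_epoch = -1
    for epoch, err in enumerate(val_errors):
        if err < best_err - min_delta:
            # Meaningful improvement: remember this epoch (and its weights)
            best_err = err
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs: stop training
            return epoch, best_epoch
    return None, best_epoch

# Made-up validation errors: improves until epoch 2, then drifts upward
errors = [1.0, 0.8, 0.6, 0.7, 0.65, 0.9, 0.95, 1.0]
stop_epoch, best_epoch = early_stopping_epoch(errors, patience=3)
```

In practice the weights saved at `best_epoch` would be restored after stopping, so a few noisy epochs do not affect the final model.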

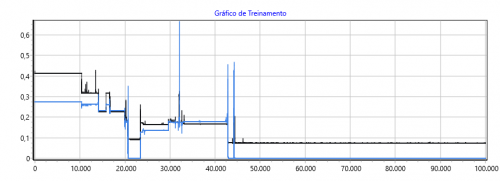

To adapt the network to a classification setting, I added four more neurons to the output layer, for a total of five, each of which is activated for one figure type. The resulting error curves are shown in the graph (image 2).
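For reference, the five-output target encoding can be sketched as below. The class order and the helper names `one_hot` and `decode` are assumptions for illustration; the predicted figure is taken to be the output neuron with the highest activation.

```python
import numpy as np

# Assumed class order, matching the order of the original scalar targets
CLASSES = ["Circle", "Star", "Pentagon", "Square", "Triangle"]

def one_hot(label):
    """Target vector with 1.0 at the figure's output neuron, 0.0 elsewhere."""
    target = np.zeros(len(CLASSES))
    target[CLASSES.index(label)] = 1.0
    return target

def decode(outputs):
    """Map the five output activations back to a figure name."""
    return CLASSES[int(np.argmax(outputs))]

target = one_hot("Pentagon")
predicted = decode([0.1, 0.2, 0.05, 0.9, 0.3])
```

Unlike the single-output scheme, this encoding does not imply that a star is "between" a circle and a pentagon.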

Is there any way to improve this a little more?