Parallelism and simultaneity

Reading a few things on the topic, I realized that they are not the same thing, so I would like to find out:

- What is the difference between parallelism and concurrency in processes?

3 answers

Parallelism and simultaneity.

Simultaneity is often called concurrency, since tasks compete with each other for an execution unit and each task can only run for a small slice of time. But some people define concurrency differently.

You only have parallelism when at least two tasks really do run at the same time.

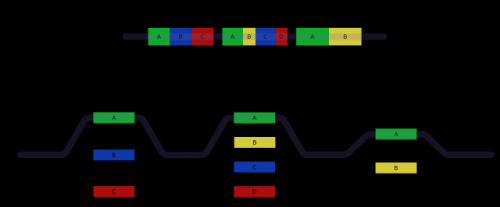

Note that the computer can give the impression of simultaneity. In fact, the term simultaneity is used for perception, as the Wikipedia article on it describes, even though the concept is not specific to computing. The difference is whether something is real or only perceived.

The perception comes from switching between tasks. Each task runs for a short time, then the processor moves on to another task. Because these switches happen so often (a matter of microseconds), a human gets the impression that the tasks are happening at the same time, but only one of them runs at any given moment. They are simultaneous, but not parallel. Simultaneity of this kind helps reduce latency.
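The switching described above can be simulated in a few lines. This is a minimal Python sketch, not how a real operating system scheduler works: it assumes each task is a generator that voluntarily yields after every unit of work (cooperative scheduling), and `task` and `round_robin` are hypothetical names. Only one task ever runs at a time, yet their steps come out interleaved.

```python
from collections import deque
from typing import Generator, Iterable

Task = Generator[str, None, None]


def task(name: str, steps: int) -> Task:
    """A hypothetical task that does `steps` units of work, yielding after each one."""
    for i in range(1, steps + 1):
        # Each yield marks the end of a time slice: the task gives the CPU back.
        yield f"{name}{i}"


def round_robin(tasks: Iterable[Task]) -> list[str]:
    """Run all tasks on ONE simulated execution unit, one slice at a time."""
    ready = deque(tasks)
    trace = []
    while ready:
        current = ready.popleft()          # "switch" to the next ready task
        try:
            trace.append(next(current))    # let it run for exactly one slice
        except StopIteration:
            continue                       # task finished; drop it from the queue
        ready.append(current)              # put it at the back of the line
    return trace


print(round_robin([task("A", 3), task("B", 2)]))  # ['A1', 'B1', 'A2', 'B2', 'A3']
```

From the outside, A and B both "progress", but the trace shows they never execute at the same instant: simultaneous, not parallel.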

The computer can only get parallelism if it has some mechanism that actually runs more than one thing at the same time.

The most common way is to have more than one processor, physical or logical. Even a logical processor has physical support that clearly separates the two execution units. This is how high throughput is achieved.
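A minimal Python sketch of spreading CPU-bound work over several processors. It assumes CPython, where separate processes (not threads) are what let CPU-bound work actually run in parallel; the prime-counting workload and the `count_primes`/`split` helpers are hypothetical examples.

```python
import math
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds: tuple[int, int]) -> int:
    """Count primes in [lo, hi) by trial division (deliberately CPU-bound)."""
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, math.isqrt(n) + 1)):
            count += 1
    return count


def split(limit: int, parts: int) -> list[tuple[int, int]]:
    """Cut [0, limit) into at most `parts` independent, contiguous chunks."""
    step = max(1, -(-limit // parts))  # ceiling division, never zero
    return [(i, min(i + step, limit)) for i in range(0, limit, step)]


if __name__ == "__main__":
    workers = os.cpu_count() or 1
    chunks = split(100_000, workers)
    # Each chunk is handed to a separate worker process, so on a multi-core
    # machine several chunks can be computed at the same instant.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        total = sum(pool.map(count_primes, chunks))
    print(total)
```

The chunks never talk to each other, which is the easy case for parallelism. The `__main__` guard matters because some platforms start worker processes by re-importing the main module.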

The processor does not have to be the main one; some tasks are parallelized on specialized processors, such as GPUs.

You can also achieve distributed parallelism using more than one computer. Obviously two computers can actually perform tasks at the same time.

Any application running on a computer with a modern, complete operating system (excluding some niche systems) can run in parallel, or at least simultaneously. On such systems it is very common to have multiple applications running at the same time.

Two tasks can be parallel without being concurrent: they can be completely independent of each other. At least one definition says so.

This can be seen in the diagram on the Wikipedia page about OpenMP.

Pure parallelism is not difficult to achieve. If it solves your problem, it is the best of all worlds. But its pure form can only be used in very specific situations; it is hard for an entire application to be fully parallel. As soon as the tasks have to communicate, things get complicated, and if that communication happens while they are running, we probably already have competition for the same resources.
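When tasks have to communicate, one common way to keep it manageable is message passing through a thread-safe queue rather than shared variables. This is a minimal Python sketch, assuming two threads as the tasks; the squaring work, the `None` sentinel and the function names are hypothetical choices for illustration.

```python
import queue
import threading


def producer(q: queue.Queue, items) -> None:
    for item in items:
        q.put(item)   # hand a piece of work to the other task
    q.put(None)       # hypothetical sentinel meaning "no more work"


def consumer(q: queue.Queue, results: list) -> None:
    while True:
        item = q.get()  # blocks while the queue is empty
        if item is None:
            break
        results.append(item * item)


def pipeline(items) -> list:
    q = queue.Queue(maxsize=4)  # bounded: the producer waits if the consumer falls behind
    results: list = []
    threads = [
        threading.Thread(target=producer, args=(q, items)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


print(pipeline(range(5)))  # [0, 1, 4, 9, 16]
```

The queue is the only thing both tasks touch, and it does its own locking, so neither task has to reason about the other's internal state.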

Concurrency without parallelism is only useful for perception, or when there is waiting on resources external to the execution unit. Since managing the switches has a cost, making something simultaneous but not parallel when there is no waiting just slows everything down. Because we are talking about human perception, there is still a benefit: the user does not have to wait for a whole sequence of tasks to finish, even though the total work takes longer.
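The "waiting for external resources" case is where concurrency pays off even on one execution unit. A minimal Python sketch, assuming `time.sleep` as a stand-in for a network or disk call (`fake_io` is hypothetical): while one thread waits, the others can start their own waits, so the waits overlap.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(delay: float) -> float:
    """Hypothetical stand-in for an I/O call: the thread just waits."""
    time.sleep(delay)  # a sleeping thread does not need the CPU (or CPython's GIL)
    return delay


def run_sequential(delays: list[float]) -> float:
    start = time.perf_counter()
    for d in delays:
        fake_io(d)
    return time.perf_counter() - start


def run_concurrent(delays: list[float]) -> float:
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(delays)) as pool:
        list(pool.map(fake_io, delays))  # all waits are in flight at once
    return time.perf_counter() - start


delays = [0.1] * 4
print(f"sequential: {run_sequential(delays):.2f}s")
print(f"concurrent: {run_concurrent(delays):.2f}s")
```

If `fake_io` did pure computation instead of waiting, the threaded version would gain nothing in CPython and would pay the switching overhead, which is the slowdown the paragraph above describes.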

Concurrency is very useful for GUIs, disk access, networking, etc. If the work can also run in parallel, even better. When there is no interaction with users at all, concurrency can be much less useful without parallelism.

Applications that aim to be real-time work best if they are serial, or at least purely parallel. It is still possible to get close to real time if concurrency is well controlled, but it cannot be 100% guaranteed.

Concurrency as competition

Some say that concurrency only occurs when tasks depend on each other. There is concurrency when they compete for a resource that they both need to access in some way, besides the processor: memory, disk, etc. In this case we are talking about simultaneous tasks disputing who gets to access what.

Concurrency, in this sense, can occur with parallel tasks or with merely simultaneous ones.

Concurrency, in this sense, is a computational problem that is hard to solve in most situations. There are ways to make it easier, but if you don't know what you're doing the consequences can be catastrophic. Finding out what causes a problem when something goes wrong is extremely difficult: the bug is almost never reproducible without advanced diagnostic tools and a lot of experience. When you program concurrently, the rule that code must be correct, not just appear to work, matters even more, because the order of execution is usually not deterministic.
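A classic illustration of why "it works" is not enough is the lost update on a shared counter. This is a minimal Python sketch with hypothetical names (`Counter`, `run`); whether the unsafe version actually loses updates on a given run depends on when the interpreter switches threads, which is exactly the non-reproducibility described above.

```python
import threading


class Counter:
    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Read, then write: another thread may run between these two steps,
        # and its update is then overwritten (a "lost update").
        current = self.value
        self.value = current + 1

    def increment_safe(self) -> None:
        # The lock makes the read-modify-write atomic with respect to other threads.
        with self._lock:
            self.value += 1


def run(safe: bool, threads: int = 8, per_thread: int = 10_000) -> int:
    counter = Counter()
    step = counter.increment_safe if safe else counter.increment_unsafe

    def worker() -> None:
        for _ in range(per_thread):
            step()

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return counter.value


print(run(safe=False))  # may be below 80000; can differ between runs
print(run(safe=True))   # always 80000
```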

It is very difficult to maintain shared state without incurring deadlocks or livelocks while keeping it consistent and atomic.
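One well-known discipline for avoiding deadlock when a task needs two locks is to always acquire them in the same global order. This is a minimal Python sketch, assuming a hypothetical `Account` class whose creation-order `id` serves as that order; it is one technique among several (timeouts and lock-free designs are others).

```python
import itertools
import threading

_ids = itertools.count()  # hypothetical global ranking used only for lock ordering


class Account:
    def __init__(self, balance: int) -> None:
        self.id = next(_ids)
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    # Assumes src is not dst (threading.Lock is not reentrant).
    # Always acquire the lock of the account with the lower id first.
    # Without this rule, transfer(a, b) and transfer(b, a) running at the same
    # time could each grab one lock and wait forever for the other (deadlock).
    first, second = (src, dst) if src.id < dst.id else (dst, src)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def opposite_transfers(rounds: int = 1_000) -> tuple[int, int]:
    a, b = Account(1_000), Account(1_000)

    def move(src: Account, dst: Account) -> None:
        for _ in range(rounds):
            transfer(src, dst, 1)

    threads = [
        threading.Thread(target=move, args=(a, b)),
        threading.Thread(target=move, args=(b, a)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a.balance, b.balance


print(opposite_transfers())  # (1000, 1000)
```

Both threads move the same total in opposite directions, so the balances end where they started; the locks keep each transfer atomic and the ordering rule keeps the two threads from blocking each other forever.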

Serial

For completeness: if everything runs in a single line of execution, it is called serial. This is very rare nowadays, at least in most general-purpose computing devices, but not so rare in highly specialized ones.

Conclusion

There is so much ambiguous, misguided and even wrong information on the subject that I hope I have not written anything wrong myself. Surely some people will consider this wrong or right depending on which definition they believe is correct.

I have seen widely accepted definitions that contradict each other along these two lines of thought.

You were probably confused because books more often present concurrency as competition.

Concurrency can be seen as an operational state in which the computer "apparently" does many things at the same time. Parallelism is the ability of the computer to do two or more things at the same time.

The main difference between parallelism and concurrency is execution speed. Concurrent programs can run hundreds of separate paths of execution without changing the speed.

The book Effective Python: 59 Specific Ways to Write Better Python, by Brett Slatkin, has a discussion about it.

Multiprocessing: since their inception, computers have been seen as sequential machines, where the CPU executes the instructions of a program one at a time. In reality, this view is not entirely true, since at the hardware level multiple signals are active simultaneously, which can be understood as a form of parallelism.

With the implementation of multiprocessor systems, the concept of concurrency or parallelism can be expanded to a broader level, called multiprocessing, where a task can be split and run at the same time by more than one processor.

Processor-level parallelism: the idea is to design computers with more than one processor, which can be organized as an array or vector, sharing a bus, with or without shared memory.

Since the concepts of parallelism and simultaneity are very similar, the conclusion is that there is no difference between them, as the short excerpt above suggests when the author says "concurrency or parallelism".