The first programming language

I understand how things worked back when computers were purely mechanical and were programmed with punched cards. But once the first "digital" computers, built with valves and relays, appeared, it becomes harder for me to understand how programming was done. I imagine there was something like a compartment holding a pre-programmed array of relays (similar to punched cards), containing a single, fixed program for the machine to run. When the program had to be changed, the programmers simply rearranged the valves and the machine kept working.

How did we arrive at today's notion of programming, where the programmer types code into a text editor and compiles it? I can't imagine how it got to that point. Take assembly: the assembler merely translates text into binary, but gee, you would need another programming language to create the assembler in the first place.

Suppose there were only computers controlled by relays and valves. How could someone write code in assembly when there was no text editor or anything?

Is the result of assembling another text file containing only 0s and 1s? Or are electrical impulses sent to the valve machine?

2 answers

TL;DR

Some statements in the question rest on contemporary premises. You have to abandon all of that to understand how "old" computers worked. And I doubt anything succinct will give a good idea of how they worked; really grasping it takes a lot of study. There was a lot of variation.

0s and 1s can be stored and processed in various ways, and so can their input and output.

You would need another programming language to create the assembler

You don't. It can be done by hand. It is not a pleasant task for a human being, but it was what they had. And that, I think, resolves much of the doubt.

Under the premise of the question, the first programming language did not exist on the computer. It existed only on paper. Someone would write down what the program was supposed to do, translate it right there into numeric codes, and then convert those to binary, all by hand, exactly as the computer does today (except for the conversion to binary, since internally everything is already binary). Internally, the language was binary code.

So assembly, or a rudimentary form of it, existed only on paper. The assembler was a human. The automated assembler came later: it made no sense to use the computer to automate so many problems but not the programmer's own.

High-level languages were born the same way: people got tired of writing something understandable and then also having to write the assembly to execute it.

Is the result of assembling another text file containing only 0s and 1s?

In a way, yes, but that is not a precise definition.

Or are electrical impulses sent to the valve machine?

Everything in computers is electrical pulses. But it is not easy for a human being to generate these pulses directly, so an electromechanical device is used to generate them: switches. To this day we use keys, which are essentially switches, to enter this data; a panel of keys is a keyboard. Today's keys are simply arranged to be easier for us to use than the switches of the early days.

Binary codes were entered with mechanical on/off switches. Yes, bit by bit.

Introduction

Digital computers, i.e. electronic machines based on 0 and 1, were created in the 1930s and 1940s, more or less together with programmable computers, that is, machines that were universal Turing machines. At least that is what the history says; there are always those who argue it happened a little differently from the official story.

Initially, all information was stored in a rudimentary form of memory (another example, and the most widely used for a long time). In fact, each computer tried something a little different, or took a very different path, until one approach eventually prevailed. But memory as we know it today only emerged in the 1970s.

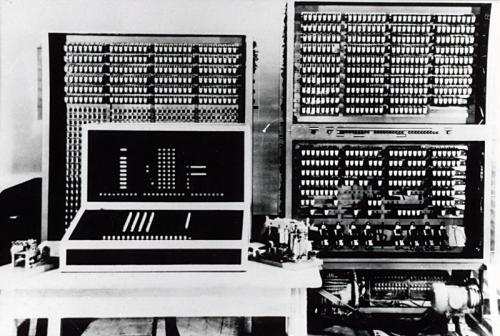

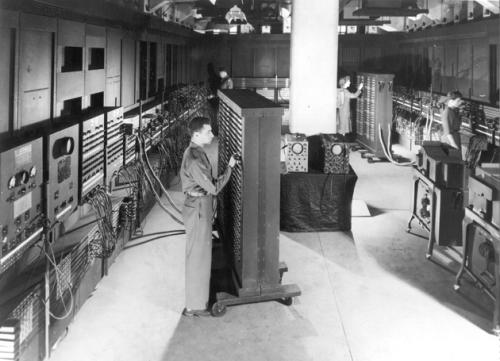

There is dispute about which was the first computer. For a long time ENIAC was officially considered the first. Even if that is not true, it is important for understanding the evolution. The Wikipedia article has many details that help explain how it worked.

The problem with the question is that it assumes a single event structured everything. In fact there was a lot of experimentation, and not all of it became famous. Several paths were taken.

The Mark I (one of the variations) was an important computer. John von Neumann is credited as the creator of the basic "modern" computer architecture. Another evolution is also important. If you want details, follow the links; it is difficult to understand everything without picking up all the details.

There is a list of the computers that started the story, which, as a rule, were programmed by hand.

Forget the idea of files and text. It was binary. Think concretely.

How it was done

At some point someone did "program" a computer by rearranging valves. But that cannot be considered programming as we know it today. It was quickly realized that a more changeable form was needed: the program had to be entered in some way (possibly still by punched card), stored, and then followed, as Alan Turing's theory dictated.

You should imagine that there was not much room for error. The programmer had to make sure his code had no errors; everything had to be checked by eye. Of course, at that time only very capable people worked with it: they were careful because they knew they could not afford mistakes, they mastered the whole process (after all, they had invented everything), and they only did very simple things by today's standards.

Data entry has nothing to do with programming itself. Just as today, the input device serves any purpose. Whether it was entering numbers to be calculated or numbers that told the computer what to do made no difference, just as today it does not matter whether you use the keyboard to send a message or to write a program.

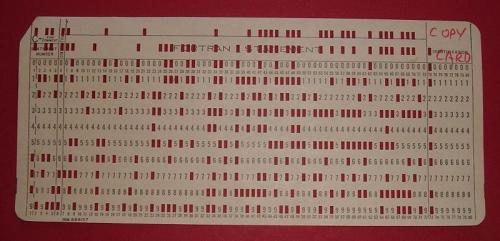

One of the problems with using switches was that when you loaded another program, the current one was obviously lost. So people started using punched cards (or paper tape) for programs. The cards were punched according to the bits that had previously been worked out on paper. That way the program was never lost (except through deterioration of the material, and even then it could be reproduced).

Of course, the card was only used through the same input mechanism: it changed the state of the switches depending on whether there was a hole or not. The specific mechanical process changed, but the basic concept and operation stayed the same.

Note that this doesn't matter much. The abstractions that were created are what made programmers productive. This is why higher-level languages were created: they were conveniences for humans.

Data entry also needed to become more productive. The gain was not as great, but it mattered. Just as there were keyboards for punching cards (at least in the more modern card punches), people realized that the keyboard could directly set the programming switches. Of course, these were no longer mechanical, at least not purely.

Punched cards were used in mechanical computers for entering pure data, not programs, which did not exist there.

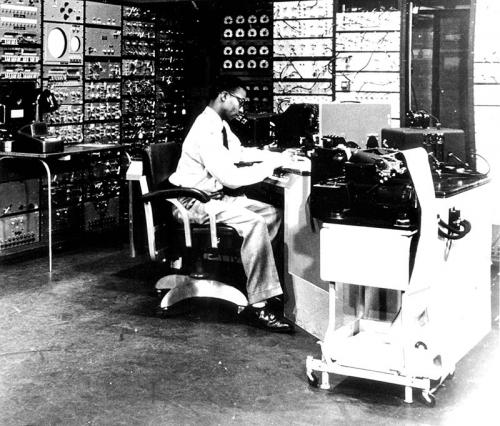

The image above starts to show the computer as we know it today. This was already the mid-1960s. There was input by keyboard, output by printer or video, and storage on magnetic media (which is already becoming obsolete), still sequential, although perhaps there was a random-access medium somewhere out of view. There was little of it because it was too expensive.

See how what is considered the first microcomputer, from 1975, was so simple that it worked like the first modern computers of the 1940s. It could have an optional keyboard; I don't know whether that was available in the very first version.

See its simulator. Try programming it.

The first language

In the sense of the question, I believe binary code was the first programming language, although it is highly likely that something more abstract existed before it.

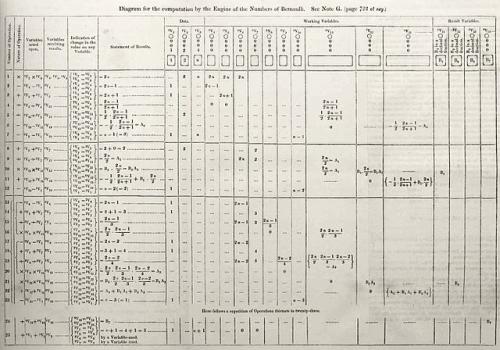

I have no evidence, but it is possible that at least one form of assembly was used as the first language of these modern computers, though only on paper, not on the computers themselves. If you consider what was done on paper to build computational machines, then you have to look at what Ada Lovelace used, Note G, shown in the image above.

High-level languages were created initially to give programmers more cognitive power. But they were also a way to stop depending on the specific instructions of one computer and to be understood by any computer, given a transformation, manual or not (by then assemblers were already in use, and creating a compiler was the natural next step).

Conclusion

Everything is evolution. You have to take one step at a time, and there are many steps. You have to create the egg first and then the chicken. Or is it the other way around? Fortunately this dilemma does not exist in computing: first came binary (which is already an abstract concept), then numeric code (a higher level), later text with assembly, and only in the 1950s did more abstract languages appear, closer to what we humans understand.

To show this better I would have to go deeper and show each step, and it would get too long. The links already help you search further. Don't be lazy about clicking on all of them, here and on Wikipedia. It is tiring, but it is the only way to learn everything and fully understand it.

There is a question about how modern processors work. They are not so different from the beginning, although there were "several beginnings".

Also useful: How is a programming language developed?

How would anyone be able to write code in assembly when there was no text editor or anything?

The answer is a bit long, but it will be worth it. Let's take the opportunity to clear up some concepts:

Any and every value in an electronic computer, whether valve-based or transistorized, boils down to the presence of a signal (usually 1 or true) or its absence (0 or false).

When it was necessary to change the program, the programmers just rearranged the valves and the machine kept working [...]

In fact, valves worked like the transistors that succeeded them: their state could be changed by signals.

Programs, even on the most modern computers, are nothing more than values expressed in binary that instruct the processor how to handle other values (data) according to predetermined instructions.

From the English Wikipedia article on computer programming (free translation):

[...] programs [for the first generation of computers] had to be meticulously entered using the instructions (elementary operations) of the particular machine, often in binary notation.

Each computer model used different instructions (machine language) to accomplish the same task.

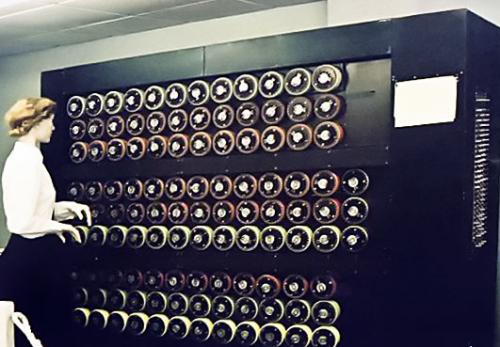

Computers like ENIAC used function tables (literally large bitmaps) to enter instructions:

The operator then programmed the computer by turning on and off hundreds of physical switches on these panels.

Cards succeeded the function tables, using the same mechanism: each position on a card corresponded to a true/false value.

Later, assembly languages were developed, allowing the programmer to specify each instruction in text form, using abbreviations for each operation code instead of a binary number and specifying addresses symbolically (for example, ADD X, TOTAL).

The first version of assembly was not really text, just a set of one-character mnemonics covering the machine's instructions. It was used on EDSAC, where it was called initial orders.

These libraries kept expanding, with more and more mnemonics covering ever larger sets of operations.

A notable early example is the IBM 650. It used valves and punched cards, and each instruction was a 10-digit word: 2 digits for the operation, 4 for the memory address of the data, and 4 for the address of the next instruction. Note the similarity with modern assembly languages:

    addr   op data next
    0001 - 00 0001 0000
    0002 - 00 0000 0000
    0003 - 10 0001 8003
    0004 - 61 0008 0007
    0005 - 24 0000 8003
    0006 - 01 0000 8000
    0007 - 69 0006 0005
    0008 - 20 1999 0003

Written in mnemonic form, part of it looked like this (in execution order, following the "next" field):

    0004  RSU  61 0008 0007  Reset the accumulator, subtract the value 2019990003 from the upper accumulator (8003)
    0007  LD   69 0006 0005  Load the value 0100008000 into the distributor
    0005  STD  24 0000 8003  Store the distributor at address 0000; next instruction at location 8003

Entering a program in assembly language is generally more convenient, faster, and less prone to human error than using machine language directly, but because an assembly language is little more than a different notation for a machine language, any two machines with different instruction sets also have different assembly languages.

So the answer to your question would be: initially directly into memory, and later by copying bits from an external medium (cards, for example).

The steps described so far explain how computers became sophisticated enough to process sets of commands. Now to your second question:

[...] Is the result of assembling another text file containing only 0s and 1s? Or are electrical impulses sent to the valve machine?

Everything in a computer is binary signals carried by electrical impulses, or representations of those states. Even a text file, in which letters and symbols are represented by... binary codes.

In the beginning, displays were rows of luminescent valves used to show the state of particular bits inside the computer. However, since the television technology of the time used valves to display images, it soon migrated to computers: thus was born the VDU (video display unit); the first commercial model was the Datapoint 3300, launched in 1967.

Another technology absorbed from the teletypes and typewriters of the time was the keyboard. ENIAC was the first computer to use one, both for data input and for output, as a printer.

The marriage of the two technologies (mnemonic instructions that could be viewed on a monitor, and a keyboard that allowed agile input of instructions) enabled more advanced programming methods.

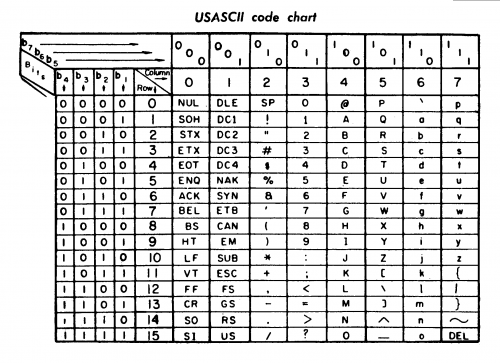

Remember that text files are actually sequences of binary codes? One of the first codes to be widely used was ASCII, developed in the early 1960s and derived from telegraph code.

ASCII chart from a 1972 printer manual (b1 is the least significant bit).

In this format the letter A is represented by the value 65, B by 66 and so on.

A first program, written in machine language (most likely using operation mnemonics), was created to let data typed on the keyboard be stored in an area of memory, as source code. Let's use an example in C:

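    volatile int x, y;
    /* foo is not shown in the original; assumed definition, consistent with the disassembly below. */
    static inline int foo (int a)
    {
      if (a < 0) return a * 2;
      if (a == 0) return 1;
      return a + 10;
    }
    int
    main ()
    {
      x = foo (y);
      return 0;
    }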

That first program copied the keyboard input into memory character by character, without interpreting its contents, only displaying it on the screen. For example, the word 'volatile' would be stored like this:

    v  01110110
    o  01101111
    l  01101100
    a  01100001
    t  01110100
    i  01101001
    l  01101100
    e  01100101

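Extremely inefficient, right? Another part of the program then read the characters stored at these addresses, compiled them, and turned them into machine instructions for the processor (shown below as mnemonics).

Our C example, compiled for a modern x86-64 processor and disassembled with the source lines interleaved (partial listing), looks like this: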

    5   {
    6     x = foo (y);
       0x0000000000400400 <+0>:   mov    0x200c2e(%rip),%eax   # 0x601034 <y>
       0x0000000000400417 <+23>:  mov    %eax,0x200c13(%rip)   # 0x601030 <x>
    7     return 0;
    8   }
       0x000000000040041d <+29>:  xor    %eax,%eax
       0x000000000040041f <+31>:  retq
       0x0000000000400420 <+32>:  add    %eax,%eax
       0x0000000000400422 <+34>:  jmp    0x400417 <main+23>

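The 0x... numbers are the memory addresses at which each instruction is stored (the <+n> part is its offset from the start of main); the mnemonics (such as mov, xor and retq) are readable names for the binary instruction codes the processor actually executes.

The operator then pointed the execution pointer at the compiled result, running the binary code.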

The rest, as they say, is history.