What are the main differences between Unicode, UTF, ASCII, ANSI?

What are the main differences between the Unicode, UTF, ASCII and ANSI "encodings"?

Are all of them really encodings, or are some just "sub-categories" of others? I don't need to know every detail of each one, only a brief summary of each and, if possible, how they differ from one another.

2 answers

ASCII

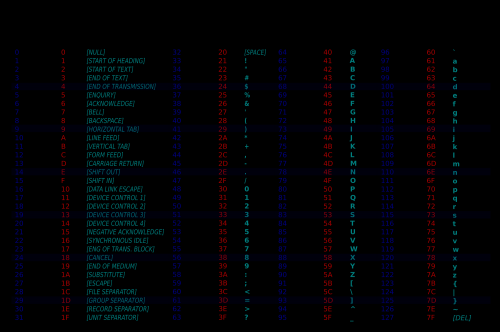

American Standard Code for Information Interchange. As the name says, it is a standard that serves Americans well. It covers the numbers 0 to 127: the first 32 (0 to 31) and the last one (127) are control characters, and the rest are "printable characters", that is, ones humans can read. It is pretty much universal. It can be represented with only 7 bits, although one byte is usually used.

Naturally it has no accented characters, since Americans don't use them.
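To make the ranges above concrete, here is a small Python sketch (Python is my choice for illustration, the question is not tied to any language). It only counts the control and printable code points and checks the 7-bit claim.

```python
# ASCII code points run from 0 to 127.
# 0-31 and 127 are control characters; 32-126 are printable.
control = [cp for cp in range(128) if cp < 32 or cp == 127]
printable = [chr(cp) for cp in range(32, 127)]

print(len(control), len(printable))  # 33 95

# Every ASCII code point fits in 7 bits.
widest = max(cp.bit_length() for cp in range(128))
print(widest)  # 7

# Accented letters are outside ASCII, so encoding them fails.
try:
    "é".encode("ascii")
    accent_ok = True
except UnicodeEncodeError:
    accent_ok = False
print(accent_ok)  # False
```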

ANSI

There is no such encoding.

The term stands for American National Standards Institute, the equivalent of our ABNT.

Since it established some character-usage standards to meet diverse demands, many encodings (actually code pages) end up being generically called ANSI, partly as a counterpoint to Unicode, which comes from a different body and defines a different kind of encoding. These code pages are usually considered extensions of ASCII, but nothing prevents a specific encoding from not being 100% compatible.

Again, it was an American solution for handling international characters, since ASCII did not serve that need well.

Depending on the context, and even the era, it means something different. Today the term is often used for Windows-1252, since much of the Microsoft documentation refers to that encoding as ANSI. ISO 8859-1, also known as Latin1, is also widely used.

All encodings called ANSI that I know can be represented by 1 byte.

So it depends what you're talking about.
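A quick way to see why "ANSI" is ambiguous is to compare the two code pages mentioned above. This is only a sketch using Python's built-in `cp1252` and `latin-1` codecs; the sample characters are my own choice.

```python
# "é" has the same single-byte value in both code pages.
e_cp1252 = "é".encode("cp1252")
e_latin1 = "é".encode("latin-1")
print(e_cp1252, e_latin1)  # b'\xe9' b'\xe9'

# The euro sign exists in Windows-1252 (byte 0x80) but not in ISO 8859-1.
euro_cp1252 = "€".encode("cp1252")
try:
    "€".encode("latin-1")
    euro_in_latin1 = True
except UnicodeEncodeError:
    euro_in_latin1 = False
print(euro_cp1252, euro_in_latin1)  # b'\x80' False

# The same byte is a different character depending on the assumed code page.
as_cp1252 = b"\x80".decode("cp1252")   # the euro sign
as_latin1 = b"\x80".decode("latin-1")  # U+0080, a control character
print(as_cp1252 == as_latin1)  # False
```

So a file saved as "ANSI" can only be read back correctly if you know which code page was actually used.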

UTF

On its own it doesn't mean much. It stands for Unicode Transformation Format. Several encodings use this acronym; UTF-8, UTF-16 and UTF-32 are the best known.

The Wikipedia articles have many details. These encodings are quite complex and hardly anyone knows how to use them correctly in full, including me. Most implementations are wrong and/or do not meet the standard, especially for UTF-8.

UTF-8 is compatible with ASCII (any ASCII text is also valid UTF-8), but not with any other character encoding system. It is the most complete and complex encoding that exists. Some are in love with it (that is the best term I could think of) and others hate it, even while recognizing its usefulness. It is complex for the human (programmer) to understand and for the computer to handle.
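The ASCII compatibility is easy to check. A minimal Python sketch, with sample strings of my own choosing:

```python
# ASCII text produces exactly the same bytes when encoded as UTF-8.
ascii_bytes = "Hello".encode("ascii")
same_bytes = ascii_bytes == "Hello".encode("utf-8")
print(same_bytes)  # True

# The reverse direction works too: ASCII bytes decode as UTF-8.
decoded = ascii_bytes.decode("utf-8")
print(decoded)  # Hello

# Bytes from another single-byte encoding are generally not valid UTF-8.
latin1_bytes = "é".encode("latin-1")  # b'\xe9'
try:
    latin1_bytes.decode("utf-8")
    latin1_is_valid_utf8 = True
except UnicodeDecodeError:
    latin1_is_valid_utf8 = False
print(latin1_is_valid_utf8)  # False
```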

The size of a character in UTF-8 and UTF-16 is variable: the first uses 1 to 4 bytes (depending on the version it could go up to 6 bytes, but in practice that does not happen) and the second uses 2 or 4 bytes. UTF-32 always uses 4 bytes.

There is a comparison between them. I don't know how accurate it is. It's certainly not complete.
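These sizes can be measured directly. The sketch below encodes four sample characters (my own picks) and counts the bytes; the `-le` codec variants are used so that Python does not prepend a byte order mark to the result.

```python
# Sample characters: ASCII letter, accented letter, euro sign, emoji.
samples = ["A", "é", "€", "\U0001F600"]
encodings = ("utf-8", "utf-16-le", "utf-32-le")

# Map each character to its byte length in UTF-8, UTF-16 and UTF-32.
sizes = {ch: [len(ch.encode(enc)) for enc in encodings] for ch in samples}

for ch, row in sizes.items():
    print(f"U+{ord(ch):04X}", row)
# U+0041 [1, 2, 4]
# U+00E9 [2, 2, 4]
# U+20AC [3, 2, 4]
# U+1F600 [4, 4, 4]
```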

Unicode

It is a standard for representing text, established by a consortium. Among the norms it establishes are some encodings, but it actually covers much more than that. It originated from the Universal Coded Character Set (UCS), which was much simpler and solved almost everything that was needed.

An article that everyone should read, even if you don't agree with everything in it.

Supported characters are divided into planes; the Wikipedia article gives an overview of them. Plane 0, the BMP (Basic Multilingual Plane), is by far the most used.

All these standards are formalized by ISO, the international body that regulates technical standards.

It is related to UTF.
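Since each plane holds 65,536 code points, the plane number is just the code point divided by 0x10000. A minimal sketch, where `plane` is a hypothetical helper name I made up for the example:

```python
def plane(ch: str) -> int:
    """Return the Unicode plane number of a single character."""
    # Each plane covers 0x10000 code points, so shift away the low 16 bits.
    return ord(ch) >> 16

print(plane("A"))           # 0 (BMP)
print(plane("€"))           # 0 (BMP)
print(plane("\U0001F600"))  # 1 (an emoji)
print(plane("\U00020000"))  # 2 (a CJK extension character)
```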

As @randrade linked, I did a quick translation, removing some programming-specific and opinionated parts, and I tried not to translate it word for word (my English is so-so, I will review it).

- "Unicode": is not a specific encoding; it refers to any encoding that uses Unicode code points to form characters.

- UTF-16: uses 2 bytes per "code unit".

- UTF-8: in this format each character takes between 1 and 4 bytes, and ASCII values use 1 byte each.

- UTF-32: this format uses 4 bytes per "code point" (roughly speaking, what forms a character).

- ASCII: uses a single byte for each character, of which only 7 bits are needed to represent all characters (Unicode uses the same values for 0-127); it does not include accented characters or many special characters.

- ANSI: there is no fixed standard for this encoding; there are actually several variants. A common example is Windows-1252.
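The difference between "code point" and "code unit" in the list above can be seen by counting both for the same text. This is only an illustrative sketch; the sample string is an assumption of mine, and the `-le` codecs are used to avoid a byte order mark.

```python
# One ASCII letter followed by one emoji (U+1F600, outside the BMP).
text = "a\U0001F600"

# Python strings are sequences of code points.
code_points = len(text)

# Code units: 1 byte each in UTF-8, 2 bytes in UTF-16, 4 bytes in UTF-32.
utf8_units = len(text.encode("utf-8"))
utf16_units = len(text.encode("utf-16-le")) // 2
utf32_units = len(text.encode("utf-32-le")) // 4

print(code_points, utf8_units, utf16_units, utf32_units)  # 2 5 3 2
```

Only in UTF-32 does the number of code units always match the number of code points.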

You can find more information at unicode.org, and the code charts there may also be useful to you.

Detail:

1 byte equals 8 bits