What is it and what is it for PCA, LDA, ICA?

I am conducting research on detection and facial recognition for future implementation, my research came to the algorithm of Viola Jones and reading more I came to the concepts of:

- PCA-Main Component Analysis

- LDA-linear discriminant analysis

- ICA-analysis of independent components

I was in doubt what is it, how is it, what is the use of it for?

In an image, I do I need to run the algorithm of Viola Jones and then the component analyzes or is it the other way around?

1 answers

First of all, the Viola-Jones algorithm was explained in this other answer. In fact, this answer deals especially with the difference between detection and classification (the second term is related to the other concepts that you list in your question).

Detection vs recognition

These terms are commonly confused, especially when it comes to the problem domains of computer vision involving face images. Detection comes to find (locate, know if you have or not, etc.) an object in an image. In the case of this problem domain, we are talking about finding/locating (human) faces in images. Recognition is about knowing to whom a face belongs, that is, recognizing who the individual is after having made the detection (that is, located the region of the face in the whole image). The terms are easily confused because "recognize" could be understood as "realize that there is a face", but in the literature it is used in sense of identification.

PCA

PCA (from main Component Analysis) is a statistical technique that seeks to find the strongest (changing) patterns in a mass of data.

In a fairly simple analogy, PCA does for a mass of data more or less what the derivative does for a function: it provides one (or more) measure(s) of variation. Imagine the parable that describes the movement of a projectile thrown upwards. If this movement is described by a quadratic function of the velocity of the projectile, that is, space in time (in km per hour, for example), its derivative is acceleration because it is the variation of velocity. There at the highest point of the path the velocity of the projectile is zero, but the acceleration is the same (acceleration of gravity) because the projectile slows down on the rise, stops and accelerates on the descent. A satellite launched into space has acceleration only while leaving Earth orbit, since then the acceleration becomes zero and the speed constant.

For then, roughly the PCA finds / calculates the vectors (direction and direction) that denote the main axes of variation of the data produced by a function with many variables (two or more). The first component will always indicate the axis with the greatest variation, the second component the second axis with the greatest variation, and so on (up to the maximum amount of variables).

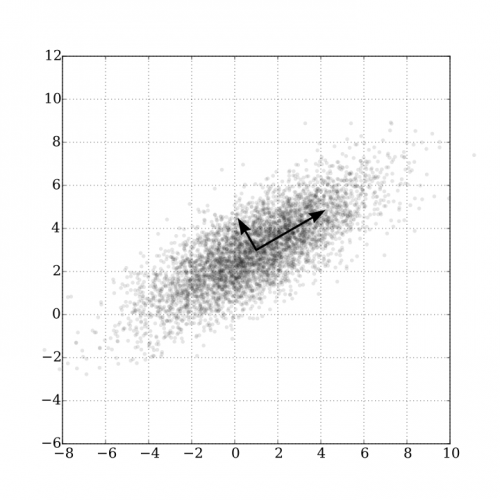

The following figure, taken from the Wikipedia article on the method, illustrates exactly that. In this example, the data is characterized by two variables (or features) and so is plotted on a two-dimensional graph. As you can see, there is an observable pattern in the distribution of these points, because although it is not really linear there is a diagonal scattering from bottom to top and from left to right. This is the main axis. The second axis is the spread on the opposite diagonal (from bottom to top, but from right to left), much smaller as seen by the magnitude of the vector of this PCA component.

And what's that for? Well, to begin with these two vectors represent very well (and with only two "values" - remember that each vector has three properties: Direction, Sense and magnitude, or modulus) how the data behaves. So they could by themselves be used to represent a dataset and compare it to another different dataset. But in the case of face recognition, PCA is used with the most common purpose of this technique: to reduce the dimensions of the problem.

In the previous Wikipedia example, the problem had two dimensions (two axes for the data). Processing these points is not so expensive because the number of dimensions is low. But still, using PCA one could throw away the second component and use only the first to project the original points on this new "axis", reducing the dimensions of the problem from 2 to only 1. Yes, in this illustrative example this could be unnecessary, but imagine in a problem with hundreds or thousands of dimensions. Image processing is a complex problem because even a small 10x10 image has 100 pixels, each of which has 3 values in a color (RGB) image! Using PCA it is possible to reduce the dimensions of the problem considerably only keeping the most relevant components to represent a face.

Or algorithm eigenface does just that: applies PCA to a set of training images and reduces them to their main color variations for each individual, then identifies/classifies a new image based on distance comparison (Euclidean even) in that "eigenfaces space" (the closest "point" in that space is the desired face/individual). The following figure illustrates some Eigenfaces of the link's Wikipidia page previous.

LDA

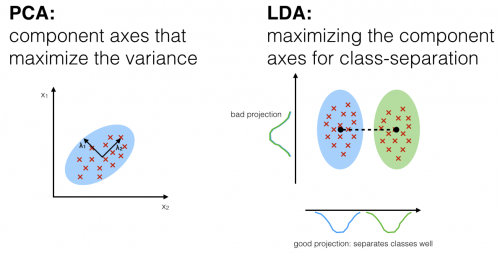

The PCA only finds the main axes of variation of a mass of data in any dimension. It does not discriminate whether these data belong to the same class or not, so this method is very useful for reducing the dimension of the problem but not for classification (read more about classification in this other question).

LDA (from Linear Discriminant Analysis) does a different approach that considers the existence of classes for the data. IT projects the probability distribution of the data on the axes, as illustrated in the following figure (reproduced of this article ), and therefore not only maintains but highlights a linear separation of the data if it exists.

In this sense, this method can be used directly to produce a classifier (a system trained from example data that is able to predict which class - in the case of faces, to which individual-a new input data belongs). It is very common, in fact, to use PCA to reduce the size of the problem (and make it easier to process computationally) and then use LDA to classify the data.

Conclusion

I'm not really an expert on these methods (I used PCA in PhD a few times, but never used LDA), so my answer may contain some small error. I also tried to make the subject palatable based on what I know of him, but remember that I made simplifications for this (and when I'm going to study for good, read straight about concepts like eigenvectors/eigenvectors, covariance matrices), and probability distribution.

Has a lot of cool material that you can use to learn all this very well. About PCA I suggest this pretty visual tutorial , and also this tutorial on OpenCV's own website (which is focused on using PCA on images in general, not necessarily on faces-note how it is possible to calculate the main orientation of objects in the image). About LDA I suggest this cool video (in addition to the article already referenced in the image previously).

How will you notice I haven't talked about {, and the reason is that I don't really know anything about this method at all. I suppose it's some alternative or complement to the other two, but I may be wrong. Maybe someone supplement with a new answer.

And in your final question (" in an image, do I need to run Viola Jones's algorithm and then the component analyses or is it the other way around?"), you need to first run the Viola-Jones algorithm and then PCA and/or LDA. the idea is that first you detect (find) the region with the face, crop the image to use only that area and then apply the other algorithms. This way you save processing and facilitates the other algorithms (the image used is smaller and only has what really matters).