Why does the Sigmoid activation function work, but ReLU does not?

I have the following code:

```python
from keras.models import Sequential
from keras.layers import Dense
import numpy
from numpy import array

# fix the NumPy random seed for reproducible results
numpy.random.seed(2)

# split the dataset into a feature matrix (X) and a target vector (Y)
X = array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])
Y = array([[0, 1, 1, 0]]).T

# create the model and add layers one by one
model = Sequential()
model.add(Dense(1, input_dim=3, activation='relu'))  # the first (input) layer requires input_dim

# compile the model, using the Adam gradient-descent optimizer
model.compile(loss="binary_crossentropy", optimizer="adam", metrics=['accuracy'])

# train the neural network
model.fit(X, Y, epochs=10000, batch_size=4)

# evaluate the result
scores = model.evaluate(X, Y)
print("\n%s: %.2f%%" % (model.metrics_names[1], scores[1]*100))

pre = model.predict(array([[0, 1, 0]]))
print(pre)

# predict_classes exists only in older Keras versions; in newer releases use
# (model.predict(...) > 0.5).astype("int32") for a single-unit output
precl = model.predict_classes(array([[1, 0, 0]]))
print(precl)
```

When I use the ReLU activation function, the accuracy never rises above 50% and the answers are wrong. With Sigmoid, everything converges right away.

I have read that ReLU is now preferred in most cases. What is wrong in my case?

Results (the expected answers are 0 and 1):

For ReLU:

accuracy: 50.00%

[[0.9953842]]

[[0]]

For Sigmoid:

accuracy: 100.00%

[[0.13338517]]

[[1]]

Answer:

Different activation functions suit different cases. As always, there is no universal, silver-bullet activation function.

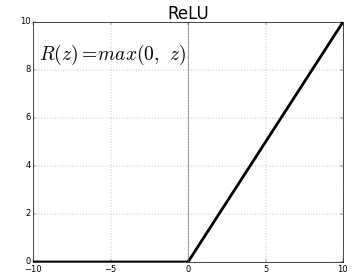

ReLU (Rectified Linear Unit) is usually used in the hidden layers of a neural network, but not in the output layer.
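To illustrate that arrangement, here is a minimal NumPy sketch (not Keras) of a network with a ReLU hidden layer and a sigmoid output, trained on the four examples from the question with plain gradient descent on binary cross-entropy. The hidden-layer size, learning rate, epoch count, initialization and the helper names `train` and `predict_proba` are all assumptions chosen for illustration; Keras with Adam would differ in the details.

```python
import numpy as np

# Training data from the question: the target equals the first input column.
X = np.array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]], dtype=float)
Y = np.array([[0, 1, 1, 0]], dtype=float).T

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, Y, hidden=8, lr=0.5, epochs=5000, seed=0):
    """Hypothetical helper: ReLU hidden layer + sigmoid output, full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, size=(X.shape[1], hidden))
    b1 = np.full(hidden, 0.1)  # small positive bias so fewer ReLU units start "dead"
    W2 = rng.normal(0.0, 0.5, size=(hidden, 1))
    b2 = np.zeros(1)
    n = X.shape[0]
    for _ in range(epochs):
        # forward pass
        z1 = X @ W1 + b1
        h = relu(z1)
        p = sigmoid(h @ W2 + b2)
        # gradient of mean binary cross-entropy w.r.t. the output pre-activation
        dz2 = (p - Y) / n
        dW2 = h.T @ dz2
        db2 = dz2.sum(axis=0)
        # backpropagate through the ReLU: its derivative is 1 where z1 > 0, else 0
        dz1 = (dz2 @ W2.T) * (z1 > 0)
        dW1 = X.T @ dz1
        db1 = dz1.sum(axis=0)
        # gradient-descent update
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def predict_proba(params, X):
    W1, b1, W2, b2 = params
    return sigmoid(relu(X @ W1 + b1) @ W2 + b2)

params = train(X, Y)
probs = predict_proba(params, X)
print(np.round(probs.ravel(), 3))           # probabilities, always in (0, 1)
print((probs > 0.5).astype(int).ravel())    # predicted classes
```

The sigmoid at the output keeps every prediction inside (0, 1), so it can be read as a probability and thresholded at 0.5, while the ReLU units do the feature extraction in between.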

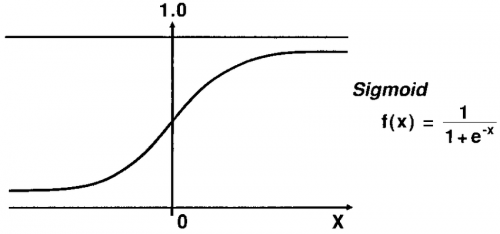

In your case, you have built a binary classification model that should output the probability of a positive outcome (in binary classification the result is either negative, False, or positive, True). Therefore, for the output layer of a binary classifier it is convenient to use the sigmoid as the activation function: its range of values, (0, 1), is exactly what binary classification needs. ReLU, by contrast, outputs 0 for every negative input and is unbounded above, so its output is not a probability; moreover, its gradient is 0 wherever its output is 0, so a single ReLU output neuron can get stuck and stop learning.
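The range and gradient argument can be checked numerically. A small NumPy sketch (the function names are my own, not Keras APIs) evaluating both activations and their derivatives on a few inputs:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    # derivative of the sigmoid: s * (1 - s), positive for every finite z
    s = sigmoid(z)
    return s * (1.0 - s)

def relu(z):
    return np.maximum(z, 0.0)

def relu_grad(z):
    # derivative of ReLU, using the common convention of 0 at z == 0
    return (z > 0).astype(float)

z = np.array([-5.0, -1.0, 0.0, 1.0, 5.0])
print(sigmoid(z))       # every value strictly between 0 and 1
print(relu(z))          # [0. 0. 0. 1. 5.]  zero for negatives, can exceed 1
print(sigmoid_grad(z))  # small but nonzero everywhere
print(relu_grad(z))     # [0. 0. 0. 1. 1.]  no gradient at all for z <= 0
```

The last line is the practical problem with a single ReLU output unit: once its input is negative for the training examples, no gradient flows back and the weights stop changing.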

Sigmoid graph:

ReLU graph:

P.S. Here is an English-language article that may help you understand the various activation functions.